Autonomous Vehicles, Self-Driving Cars and Liability: Are Manufacturers or Drivers to Blame?

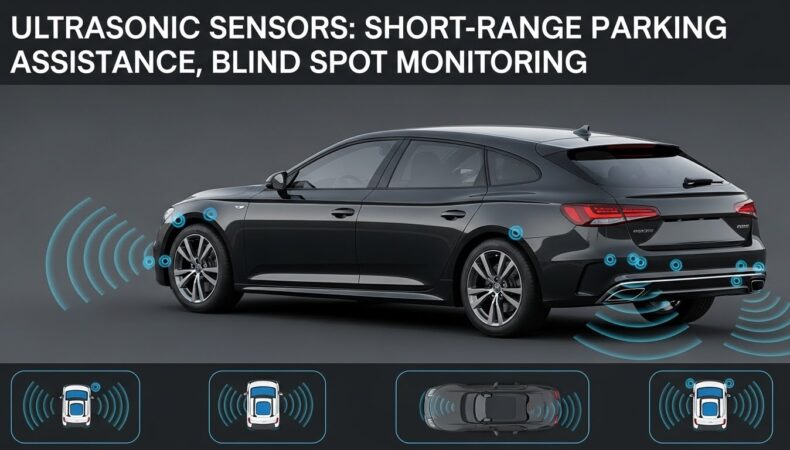

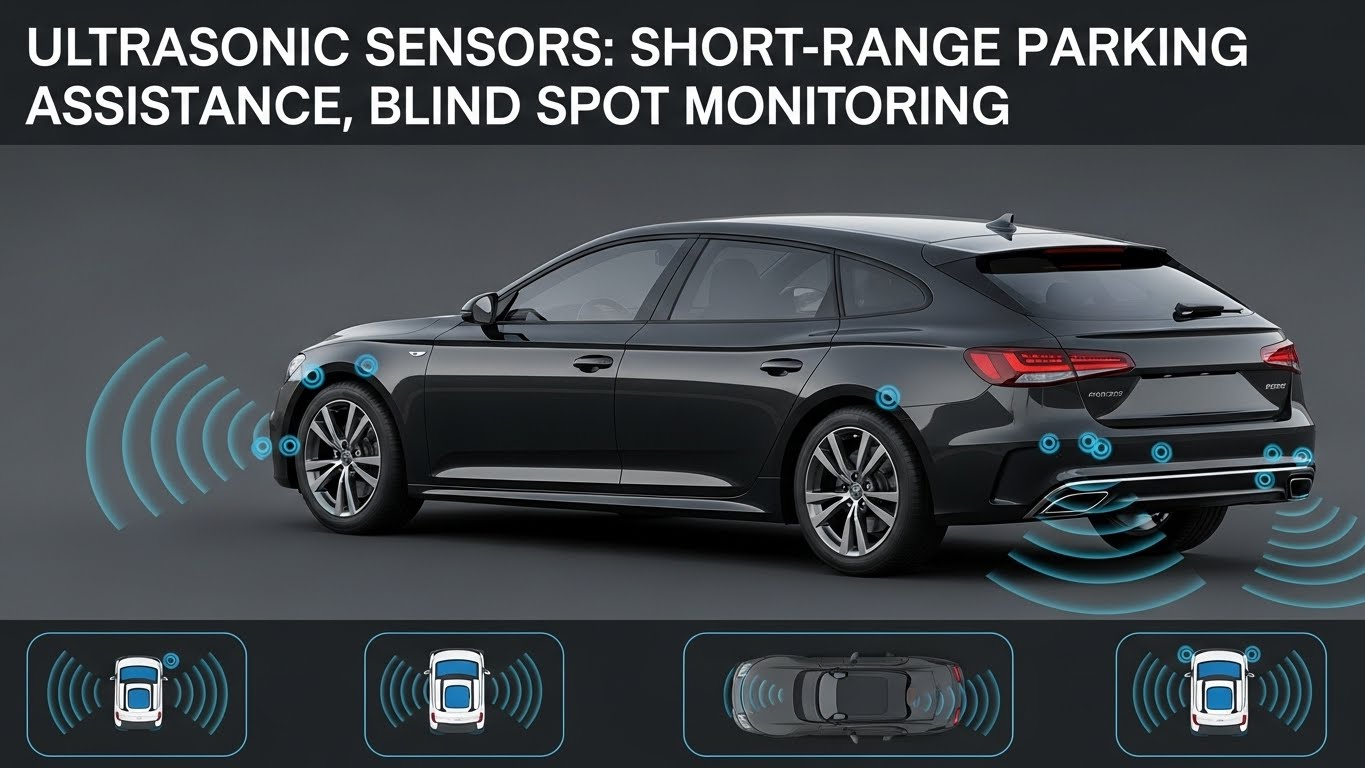

Autonomous vehicles are no longer science fiction. Self-driving features from brands like Tesla, Waymo, Mercedes-Benz, and General Motors are already operating on public roads. These vehicles depend on cameras, radar, LiDAR, GPS, and artificial intelligence to make split-second driving decisions.

But as self-driving technology spreads, so do the legal questions. When a crash occurs, who is responsible? Is it the human behind the wheel, or the manufacturer that programmed the artificial intelligence? Laws are still evolving, and every accident brings new challenges. This guide breaks down how liability works, who can be held responsible, and what victims need to know after an autonomous vehicle crash.

For more accident-related guides, explore our resources such as What Is Negligent Security? and Injuries Caused by Defective Vehicles.

Understanding the Levels of Vehicle Automation

The Society of Automotive Engineers (SAE) defines six levels of vehicle automation, numbered 0 through 5. Liability often depends on which level the vehicle was operating under at the time of the crash:

- Level 0: No automation – the human drives.

- Level 1–2: Driver assistance – the human monitors driving.

- Level 3: Conditional automation – the car handles the driving under limited conditions, but the human must take over when prompted.

- Level 4: High automation – the car drives itself, but only within defined areas or conditions.

- Level 5: Full automation – no steering wheel or human input needed.

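Most automated driving features in consumer vehicles today, including Tesla Autopilot and GM Super Cruise, are Level 2, with a few Level 3 systems approved for limited conditions. At Level 2 the driver must supervise at all times, and at Level 3 the driver must be ready to take over when prompted. In general, the manufacturer's potential liability grows as the automation level rises.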

Key Question: Who Is in Control at the Moment of the Crash?

Liability depends heavily on whether the human or the AI was controlling the vehicle at the time of the collision.

If the human was controlling the car:

The accident is treated like a traditional car crash. The driver may be responsible if they:

- Were distracted

- Failed to brake

- Misjudged distance or speed

- Ignored safety warnings

If the self-driving system was controlling the car:

The manufacturer or software developer may be to blame if the crash involved:

- Sensor malfunctions

- Faulty AI decision-making

- Failure to detect pedestrians or cyclists

- Navigation errors

- Software defects

In these cases, the claim may turn into a product liability lawsuit. You can learn more in our guide Types of Product Defects.

Common Causes of Self-Driving Car Accidents

Even advanced autonomous systems can make mistakes. Some of the most common causes include:

- Object detection failures – the AI may misread trucks, road signs, or small obstacles.

- Lane-keeping errors – improper lane centering or drifting.

- Overreliance on automation – drivers assume the car can handle more than it actually can.

- Software bugs or outdated software – the AI may behave unpredictably.

- Poor weather – rain, fog, and snow reduce sensor accuracy.

Many crashes occur because drivers think the car can operate without supervision, even though the driver-assistance systems in most consumer vehicles require constant human supervision.

When the Driver Is Liable

A driver may be at fault if:

- They misused the autonomous features

- They failed to take control when required

- They ignored warnings or fell asleep

- They used the system in inappropriate conditions

Courts often consider whether the driver acted reasonably based on the manufacturer’s instructions. If the driver disregarded clear safety warnings, liability may shift back to them.

When the Manufacturer or Developer Is Liable

Manufacturers can be held responsible when defects in the autonomous system contribute to the crash. These cases often fall under defective pr

Last modified: December 11, 2025